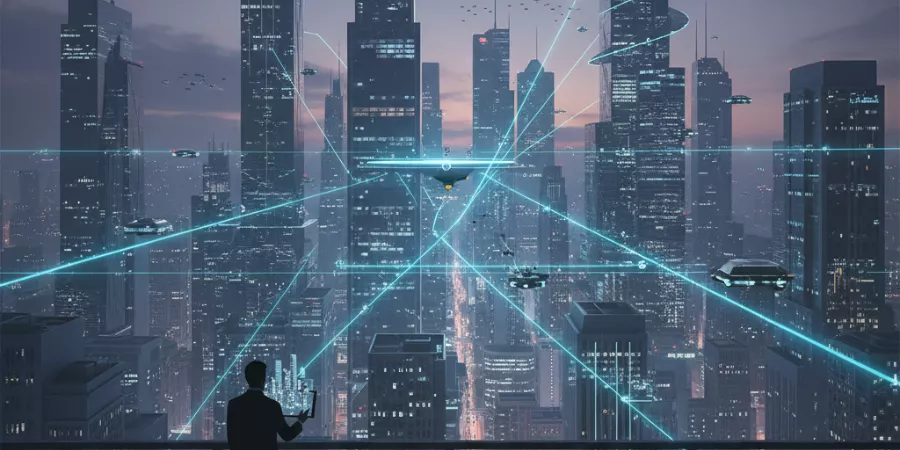

Your eyes can no longer be trusted, but Google Photos wants to help. The cloud storage giant just unveiled an AI-powered image attribution feature designed to identify deepfakes and manipulated photos that threaten to undermine visual truth across digital platforms. As synthetic media created by artificial intelligence becomes increasingly sophisticated and accessible, Google Photos is deploying advanced algorithms that analyze lighting inconsistencies, shadow anomalies, and facial feature irregularities to flag potentially altered images. With deepfakes evolving from novelty technology to genuine misinformation weapons capable of swaying elections and destroying reputations, this proactive defense mechanism represents a critical, if imperfect, tool in the escalating battle between authentic reality and artificially generated deception.

The Deepfake Threat: Why Image Authentication Matters Now

Deepfakes have transcended their origins as internet curiosities to become genuine threats to information integrity.

Recent years have witnessed deepfake technology’s democratization. What once required specialized expertise and expensive equipment now operates through consumer-accessible apps and web platforms capable of generating convincing synthetic media in minutes. This accessibility explosion creates proliferation risks across multiple threat vectors:

Political misinformation: Fabricated videos of politicians making inflammatory statements or engaging in compromising behavior can spread virally before fact-checkers debunk them, and initial impressions persist despite later corrections.

Financial fraud: Deepfake audio and video enable sophisticated impersonation schemes, with criminals mimicking executives’ voices to authorize fraudulent wire transfers or creating fake video calls to manipulate stock prices.

Reputation destruction: Individuals face harassment through non-consensual intimate imagery or fabricated compromising content designed to damage personal and professional credibility.

Erosion of visual evidence: As synthetic media becomes commonplace, authentic photographic and video evidence loses presumptive credibility, creating a “liar’s dividend” where genuine documentation can be dismissed as fabricated.

Google Photos’ image attribution feature addresses this ecosystem challenge by providing accessible verification tools integrated directly into platforms where billions of images are stored, viewed, and shared daily.

How Google’s AI Detection Actually Works

Google Photos leverages advanced AI algorithms to analyze images for telltale manipulation signatures that human eyes typically miss.

The system examines multiple forensic indicators:

Lighting inconsistencies: Natural photographs exhibit coherent light sources with predictable shadow patterns and highlight distributions. Manipulated images often betray themselves through incompatible lighting on different elements: for example, a face illuminated from the left composited onto a body lit from the right.

Shadow anomalies: Shadows provide mathematical constraints that deepfake generators sometimes violate. Incorrect shadow directions, missing shadows where they should exist, or shadows with implausible properties indicate potential manipulation.

Facial feature irregularities: AI-generated or face-swapped images may exhibit subtle distortions in facial geometry, unnatural skin textures, inconsistent aging markers, or artifacts around boundaries where synthetic faces merge with original images.

Compression artifacts: Different image generation and editing processes leave distinct compression signatures. Analyzing these patterns can reveal whether an image originated from a camera sensor or was synthesized or heavily edited.

Metadata analysis: Examining EXIF data, file history, and editing timestamps provides additional context about an image’s provenance and modification history.
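Of the indicators above, metadata analysis is the easiest to illustrate in a few lines. The sketch below is not Google's method; it is a minimal illustration that assumes the EXIF tags have already been read into a plain dictionary. The tag names (Make, Model, Software, DateTime, DateTimeOriginal) are standard EXIF fields, while the `metadata_hints` function and the `EDITOR_HINTS` list are hypothetical names introduced here.

```python
from datetime import datetime

# Hypothetical list of editor names to look for in the EXIF "Software" tag.
EDITOR_HINTS = ("photoshop", "gimp", "lightroom", "snapseed")

# Standard EXIF date/time layout, e.g. "2024:03:01 10:00:00".
EXIF_TIME_FORMAT = "%Y:%m:%d %H:%M:%S"


def metadata_hints(exif: dict) -> list:
    """Return human-readable provenance hints from an EXIF-style tag dict."""
    hints = []

    # Camera files normally record the capturing device.
    if not exif.get("Make") and not exif.get("Model"):
        hints.append("no camera make/model recorded")

    # Many editors write their own name into the Software tag.
    software = str(exif.get("Software", "")).lower()
    if any(name in software for name in EDITOR_HINTS):
        hints.append(f"edited with: {exif['Software']}")

    # DateTimeOriginal is the capture time; DateTime is the last change.
    taken = exif.get("DateTimeOriginal")
    modified = exif.get("DateTime")
    if taken and modified:
        captured_at = datetime.strptime(taken, EXIF_TIME_FORMAT)
        changed_at = datetime.strptime(modified, EXIF_TIME_FORMAT)
        if changed_at > captured_at:
            hints.append("file modified after capture")

    return hints


example = {
    "Make": "Canon",
    "Model": "EOS R6",
    "Software": "Adobe Photoshop 25.0",
    "DateTimeOriginal": "2024:03:01 10:00:00",
    "DateTime": "2024:03:02 09:30:00",
}
print(metadata_hints(example))
```

These hints are context, not proof: metadata can be stripped or rewritten, and an edited photo is not necessarily a deceptive one.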

When the system detects potential manipulation, users receive notifications or labels alerting them that an image may have been altered. This is not a definitive verdict, but a prompt for additional scrutiny before sharing or trusting the content.

The Limitations: Why This Isn’t a Silver Bullet

Google Photos explicitly acknowledges that this feature “is not foolproof,” an honest admission reflecting the arms race dynamics between detection and generation technologies.

Adversarial evolution: As detection systems improve, deepfake generators adapt to evade new detection methods. This creates a perpetual cat-and-mouse dynamic where neither side achieves a permanent advantage.

False positives: Legitimate photographs exhibiting unusual but authentic characteristics (unconventional lighting from multiple sources, extreme post-processing, or edge cases in natural photography) may trigger manipulation warnings despite being genuine.

False negatives: Sophisticated deepfakes specifically engineered to pass detection algorithms will inevitably slip through, particularly as generative AI models train on detection methods and learn to avoid telltale signatures.

Context-dependent accuracy: Detection effectiveness varies across image types, manipulation techniques, and quality levels. High-resolution, carefully crafted deepfakes pose greater challenges than low-quality synthetic media.

Platform limitations: The feature only analyzes images within Google Photos, offering no protection against manipulated content encountered on social media, news websites, or messaging platforms where most viral misinformation spreads.

Google’s transparency about these limitations is commendable: the company explicitly states that “it is always important to be critical of the information you encounter online.” The feature augments rather than replaces human judgment and media literacy.

Integration Into the Google Photos Ecosystem

The strategic deployment within Google Photos, a platform storing and organizing billions of images for hundreds of millions of users, provides massive reach for the attribution technology.

Users already trust Google Photos as their primary photo repository, making it a natural environment for authentication tools. Unlike standalone verification websites requiring users to proactively seek fact-checking, integrated detection operates passively and automatically, analyzing images as users browse their libraries or receive shared albums.

This seamless integration reduces friction that typically prevents security tool adoption. Users don’t need to remember to verify images, understand technical authentication processes, or navigate separate verification platforms. The system works invisibly, surfacing warnings only when potential issues arise.

However, this integration also creates dependency risks. Users may develop false confidence in Google’s detection capabilities, assuming flagged images are definitely fake and unflagged images are certainly authentic, neither of which is guaranteed given the technology’s inherent limitations.
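A short worked calculation shows why a flag is not a verdict. The numbers below are invented for illustration and are not Google's published accuracy figures: assume 1% of images in a library are manipulated, the detector catches 90% of them, and it wrongly flags 5% of genuine photos. Applying Bayes' rule, most flagged images turn out to be genuine, and a small share of unflagged images are still fakes.

```python
def flag_precision(prevalence, sensitivity, false_positive_rate):
    """Probability that a flagged image is actually manipulated."""
    true_flags = prevalence * sensitivity
    false_flags = (1 - prevalence) * false_positive_rate
    return true_flags / (true_flags + false_flags)


def miss_rate_among_unflagged(prevalence, sensitivity, false_positive_rate):
    """Probability that an unflagged image is actually manipulated."""
    missed = prevalence * (1 - sensitivity)
    cleared = (1 - prevalence) * (1 - false_positive_rate)
    return missed / (missed + cleared)


# Hypothetical rates, chosen only to illustrate the base-rate effect.
PREVALENCE = 0.01           # share of images that are manipulated
SENSITIVITY = 0.90          # share of manipulated images that get flagged
FALSE_POSITIVE_RATE = 0.05  # share of genuine images that get flagged

print(round(flag_precision(PREVALENCE, SENSITIVITY, FALSE_POSITIVE_RATE), 3))
# -> 0.154
print(round(miss_rate_among_unflagged(PREVALENCE, SENSITIVITY, FALSE_POSITIVE_RATE), 4))
# -> 0.0011
```

Under these assumed rates, only about 15% of flagged images are actually manipulated, which is why a warning works best as a prompt to check further rather than as proof.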

Industry Context: Google’s Broader AI Safety Strategy

The image attribution feature doesn’t exist in isolation; it represents one component of Google’s comprehensive approach to AI-generated content challenges.

Google’s content authenticity initiatives include:

C2PA support: Implementation of Coalition for Content Provenance and Authenticity (C2PA) standards that embed cryptographic metadata documenting image origins and editing history.

SynthID watermarking: Development of invisible watermarks for AI-generated images that survive compression, cropping, and other modifications while remaining imperceptible to human viewers.

Search integration: Labeling AI-generated content in Google Search results and Images to provide context about content origins.

YouTube policies: Requiring disclosure when realistic content has been altered or synthetically generated, particularly for material addressing sensitive topics.
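The provenance idea behind the C2PA item above can be sketched in a few lines: bind a record of an image's history to a hash of its bytes, then sign the record so that later changes to either are detectable. This is only a toy illustration, not the C2PA manifest format. Real C2PA manifests use certificate-based signatures, whereas this sketch substitutes a shared-key HMAC to stay self-contained. The function names and the demo key are hypothetical.

```python
import hashlib
import hmac
import json


def make_manifest(image_bytes: bytes, history: list, key: bytes) -> dict:
    """Build a signed record tying an edit history to the image content."""
    claim = {
        "content_sha256": hashlib.sha256(image_bytes).hexdigest(),
        "history": history,
    }
    payload = json.dumps(claim, sort_keys=True).encode("utf-8")
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return {"claim": claim, "signature": signature}


def verify_manifest(image_bytes: bytes, manifest: dict, key: bytes) -> bool:
    """Check that neither the claim nor the image changed since signing."""
    payload = json.dumps(manifest["claim"], sort_keys=True).encode("utf-8")
    expected = hmac.new(key, payload, hashlib.sha256).hexdigest()
    signature_ok = hmac.compare_digest(expected, manifest["signature"])
    current_hash = hashlib.sha256(image_bytes).hexdigest()
    content_ok = manifest["claim"]["content_sha256"] == current_hash
    return signature_ok and content_ok


demo_key = b"demo-key"            # placeholder secret for illustration
original = b"raw image bytes"     # stands in for real file contents
manifest = make_manifest(original, ["captured", "cropped"], demo_key)

print(verify_manifest(original, manifest, demo_key))            # True
print(verify_manifest(original + b"!", manifest, demo_key))     # False
```

Unlike the detection heuristics, this approach does not guess whether an image looks altered; it only confirms whether the file still matches what was signed, so it helps only for images that carry such a record.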

These parallel initiatives reflect recognition that deepfake challenges require multi-layered defense strategies rather than singular technical solutions. Detection algorithms, provenance tracking, watermarking, disclosure requirements, and user education must work synergistically to address the multifaceted threat.

What Users Should Do: Beyond Automated Detection

While Google Photos’ attribution feature provides valuable assistance, effective deepfake defense requires active user participation.

Maintain healthy skepticism: Treat sensational, emotionally provocative, or politically convenient images with particular caution: these characteristics make content viral but also make it attractive for manipulation.

Verify through multiple sources: Cross-reference surprising images against reputable news outlets, reverse image search tools, and fact-checking organizations before sharing.

Examine context critically: Consider whether image content aligns with known information about subjects, locations, and events. Implausible scenarios warrant additional scrutiny regardless of visual quality.

Understand attribution limitations: Recognize that the absence of manipulation warnings doesn’t guarantee authenticity; sophisticated deepfakes may evade detection.

Report suspected deepfakes: Use platform reporting mechanisms to flag suspected synthetic media for human review and potential removal.

Media literacy remains the ultimate defense against visual misinformation. Technology can assist, but cannot substitute for critical thinking and verification discipline.

The Arms Race Continues: What Comes Next

Google’s commitment to “staying ahead of the curve and developing new tools” acknowledges that this feature represents an evolutionary step rather than a final solution.

Future developments will likely include:

- Real-time video deepfake detection for streaming content

- Integration with messaging platforms for pre-sharing verification

- Blockchain-based provenance tracking creating immutable image histories

- Collaborative verification networks pooling detection capabilities across platforms

- Adversarial training using latest deepfake techniques to strengthen detection algorithms

The fundamental challenge persists: as long as generative AI improves, detection must evolve correspondingly. Google Photos’ image attribution feature won’t end deepfakes, but it provides meaningful friction that may slow misinformation’s spread while buying time for society to develop cultural and institutional responses to the synthetic media age.

In a world where seeing is no longer believing, tools that help distinguish real from fabricated become essential infrastructure for digital citizenship.